Melbourne is back online after suffering a total data loss on Saturday.

It took a bit longer than expected due to network issues, which have now been solved.

Anyone who has been affected has been notified.

Happy deploying.

Singapore IPv6 has been fixed, enjoy.

In about 2 weeks, the system will check for certain activity on the containers.

For you, this means you should log in to the panel or your container before this update is released.

After this update, you have to log in at least once every 60 days, either via SSH into the container or by logging in to the panel.

If you fail to meet this requirement, your container goes into a 7-day grace period, after which it will be stopped. After 7 more days, your container will be deleted.

The reason I chose a login-based activity check is that, depending on your use case, you may have low traffic, memory or CPU needs, so we cannot really pin activity down by those.

We will see how this works and it might be changed later but for now it should be fine.

Maybe configure an e-mail notification, so users are reminded to log in.

Good idea.

I only posted it yesterday because I had been procrastinating on it.

So far not a single line of code has been written for that feature, we will see.

There will likely be an email notification by then, if you save your address under your account. Thanks for the input.

I get notifications a week ahead when I have to pay an invoice. I guess a PHP (or Bash) cron script for sending an email notification, triggered by your checks, would be useful. If the person decides to continue, they perform the required login; if not… goodbye service.

A similar safeguard for free users exists at @Andrei with HetrixTools, where free clients need to log in from time to time to keep their service active, if I remember correctly.

I want to try this too but the thanked count is only 40 on LET. Sad.

NL has been rebooted for network maintenance; SR-IOV has been enabled for better network performance.

Maintenance announcement:

- SG will be rebooted tomorrow night, to help troubleshoot the ongoing deployment issues.

All containers will be automatically started after the reboot, it should not take longer than 5 minutes.

- NL will be physically moved to another data room next week, more information will follow.

Another maintenance announcement:

- NL will be moved into another rack on Tuesday. It will be de-racked and racked up again, so it should not take long; there is no exact time window for that.

- LA, SG and NO currently have issues with the LVM backend, which result in poor I/O performance or failed deployments in SG.

The plan for these locations is to migrate existing containers to a new LVM pool, which should solve these issues.

However, the operation could lead to data loss, so I advise anyone with a container in one of these locations to take a backup before it is migrated.

This will take place on the following days:

LA Friday, 16:00 GMT, approximately 1 hour

SG Friday, 19:00 GMT, approximately 1 hour

NO Sunday, 19:00 GMT, approximately 2 hours

The downtime will likely be shorter for each container, as long as nothing unexpected comes up.

During the maintenance, you won’t be able to control the container via microlxc.net.

After the maintenance, stock will be available again in these locations, including NO.

Tokyo is not affected by this. However, when we upgrade NL and AU, or if it becomes necessary, we will likely perform this maintenance there as well.

CH will not be moved to another LVM backend, since I plan to discontinue it due to the network-related issues.

However, I don’t plan to discontinue CH until we have a replacement; I am currently still looking for one.

I will keep you updated on this.

Patch Notes:

- Zug has been removed from microLXC, existing containers have been wiped after hitting the deadline.

- Antwerp was recently added as a replacement for Zug, thanks to Novos.be

- Package tiny now has an increased port speed of 50Mbit instead of 25Mbit*

- Overall I/O limits have been increased up to 100MB/sec*

- Several nodes are now using a local image store, to shorten deploy times and increase reliability

- Removed Fedora 31 since it is EOL; Fedora 33+ will no longer be supported, since dnf runs out of memory (OOM) on 256MB with Fedora 33

- Added CentOS Stream

*New deployments

Which port is available? Can it be used for Pi-Hole DNS?

a003-5f52-2ac1-dbe1

Patch Notes:

- After the last migration, I forgot to update the internal whitelist, which has slowed down deployments ever since; this is now fixed

- Fixed an IPv6 allocation issue which resulted in failed deployments

- Removed the strict validation before reinstall that required the container to be running; previously, if the container was stopped, the job was marked as failed

- Increased the delay between Destroy and Creation (Reinstall) to avoid mount errors

- When you click on a location, only the packages available for that location are displayed

- New Locations Auckland and Johannesburg thanks to https://zappiehost.com/

Package Updates:

- 128MB Package now has 2GB of Storage

- 256MB Package now has 3GB of Storage

- 384MB Package is now available in 4 Locations (Melbourne, Antwerp, Dronten, Norway)

The 512MB Package remains the same.

Norway has been rebooted to address the mounting issues.

If your container failed to start due to the recent LXD update, you need to start it manually via the control panel. A patch will be applied once it has been started.

Patch Notes:

- removed cooldown on deployments

- removed Fedora, since the package manager needs 256MB+ memory to run

- changed on successful deploy you will now be redirected to the dashboard instead

- added Debian 11, Rocky Linux 8

- fixed LET account issues

Regarding the mount issues, I have a plausible workaround.

Most of the servers are already using the LTS branch for stability reasons.

This means it is bug-fix only and rarely gets any updates; however, these updates are applied automatically.

There seem to be issues when they are applied: there is a small chance that, under specific circumstances, they cause those mounting issues.

It does not affect any running containers nor does it seem to affect any data.

However, if this bug appears, you will likely see it when you want to delete the container or reinstall it.

You then can’t do either, because LXD is unable to unmount the container.

According to the developers, it’s possible to unmount the container by hand; however, this method does not work reliably.

The only known working fix is rebooting the system.

I don’t expect a fix soon, since the developers can’t even reproduce the bug and can only suggest where the issue may be.

This means we will need to reboot the system now and then to keep LXC/LXD up to date.

I will announce these reboots a few days ahead. Containers will be started automatically, so as long as your application is set to auto start, this should not be a problem.

I expect a reboot to happen every few months; the downtime will not be more than a few minutes.

The kernel will still be live patched as usual.

Currently I know the following nodes are affected and will be rebooted Tuesday 05.10.21 23:00 CET:

Dronten

Antwerp

Other nodes are not affected, as of now.

The bug should disappear, once we do the updates manually.

However, due to the recent breaking LXD update in the LTS branch, you need to boot your container by hand after this maintenance. Auto start is not possible for some containers, but starting the container manually applies a fix; you only need to do this once.

If you created the container recently, you are not affected by this.

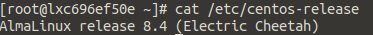

Patch Notes:

- removed Debian 9

- removed Post4VPS Forum (new accounts)

- added Almalinux 8.4

- added Support for static IPv6 configuration (CentOS/Almalinux/Rockylinux)

If static IPv6 configuration is needed, it will be configured automatically

- changed HAProxy entries will now be checked if they resolve and point to the Node

- changed 6 months account requirement to 3 months, posts and thanks will remain the same

- fixed Mailserver issues
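For anyone wondering what the HAProxy entry check mentioned above could look like, here is a minimal sketch. It is not the actual microLXC code: the function names, the injectable `resolver` parameter and the rule that every resolved address must belong to the node are all assumptions made for illustration.

```python
import ipaddress
import socket


def resolve_addresses(hostname):
    """Return all IPv4/IPv6 addresses the hostname resolves to (empty set on failure)."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return set()
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the address.
    return {info[4][0] for info in infos}


def _normalize(addresses):
    """Parse address strings so that different spellings of one IPv6 address compare equal."""
    return {ipaddress.ip_address(address) for address in addresses}


def points_to_node(hostname, node_addresses, resolver=resolve_addresses):
    """Hypothetical check: the hostname must resolve, and only to addresses of the node.

    Assumption: an entry is rejected if any resolved address is not a node address.
    """
    resolved = _normalize(resolver(hostname))
    return bool(resolved) and resolved <= _normalize(node_addresses)
```

The resolver is passed in as a parameter so the check can be tried out without real DNS lookups, for example with a small lambda that returns fixed addresses.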

If abuse remains at the same level, we will keep the 3 months; we will see.

Also, starting next week, the inactivity system will begin stopping containers that exceed the 60 days.

There is an additional 1-week grace period before the system stops these containers.

Afterwards we will patch the system to delete containers that have been stopped for 1 week after exceeding the 60 days of inactivity.

You can add your email at any time to get notifications. Alerts will be sent 30, 14, 7 and 1 day(s) before the system stops your container.

An SSH login is enough to mark the container as active.
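To make the alert schedule concrete, here is a small sketch of how the 30, 14, 7 and 1 day(s) reminders could be derived from the last login. This is only an illustration: the names are made up, and it assumes the alerts are counted back from the 60-day mark, ignoring the one-off extra grace week.

```python
from datetime import date, timedelta

INACTIVITY_LIMIT_DAYS = 60             # from the announcement
REMINDER_OFFSETS_DAYS = (30, 14, 7, 1)  # days before the stop date, from the announcement


def stop_date(last_login: date) -> date:
    """Assumed date on which the container would be stopped for inactivity."""
    return last_login + timedelta(days=INACTIVITY_LIMIT_DAYS)


def reminder_dates(last_login: date) -> list:
    """Dates on which a reminder email would go out, earliest first."""
    stop = stop_date(last_login)
    return [stop - timedelta(days=offset) for offset in REMINDER_OFFSETS_DAYS]


def reminder_due(last_login: date, today: date) -> bool:
    """True if one of the reminders falls on `today` (e.g. called from a daily cron job)."""
    return today in reminder_dates(last_login)
```

With a last login on 1 January 2021, the stop date would be 2 March 2021 and the reminders would fall on 31 January, 16 February, 23 February and 1 March.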

Patch Notes:

- added You will get an email once your container has been stopped due to inactivity.

- added You will get an email once your container has been terminated due to inactivity

- added Termination after 67 Days of inactivity

Your container will be stopped after 60 days of inactivity, followed by a 7-day grace period during which you can log in and start the container again if you wish to continue using it. After those 7 days (67 days in total), your container will be terminated by the system.

So even if you don’t subscribe to the notifications and forget about it, with working monitoring you should notice.

- removed SSH activity check

Please log in to the Portal instead; once logged in, your account will be marked as active.
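The final timeline (stopped after 60 days, terminated after 67 days, only Portal logins counting as activity) can be sketched roughly as follows. This is not the real implementation; the names and the exact boundary handling (stopping on day 60 itself, terminating on day 67 itself) are assumptions.

```python
from datetime import date, timedelta
from enum import Enum

STOP_AFTER_DAYS = 60  # from the patch notes
GRACE_DAYS = 7        # from the patch notes, termination at 67 days


class Status(Enum):
    ACTIVE = "active"
    STOPPED = "stopped"
    TERMINATED = "terminated"


def container_status(last_portal_login: date, today: date) -> Status:
    """Classify a container by the number of days since the last Portal login."""
    inactive_days = (today - last_portal_login).days
    if inactive_days >= STOP_AFTER_DAYS + GRACE_DAYS:
        return Status.TERMINATED
    if inactive_days >= STOP_AFTER_DAYS:
        return Status.STOPPED  # grace period: a Portal login makes the account active again
    return Status.ACTIVE


# Example: 60 days after the last Portal login the container is in the stopped state.
login = date(2021, 10, 1)
print(container_status(login, login + timedelta(days=60)).value)
```

Logging in to the Portal simply resets `last_portal_login`, which moves the container back to the active state as long as it has not been terminated yet.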